Kranti Kumar Parida<sup>1</sup>, Siddharth Srivastava<sup>2</sup>, Gaurav Sharma<sup>1,3</sup>

<sup>1</sup>IIT Kanpur, <sup>2</sup>CDAC Noida, <sup>3</sup>TensorTour Inc.

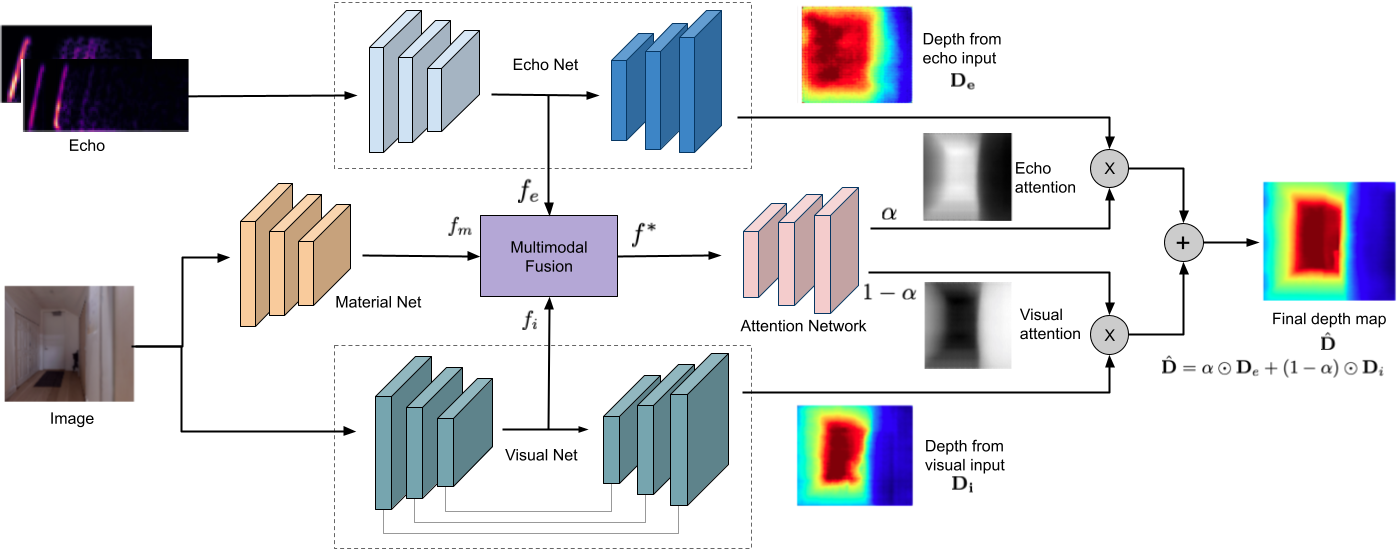

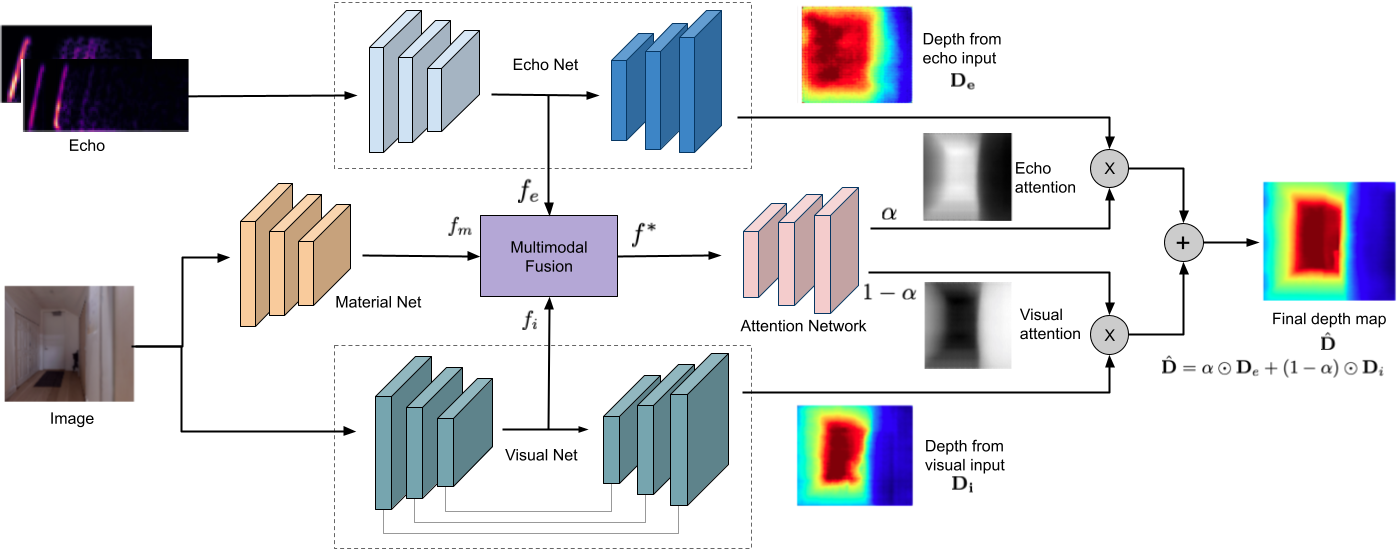

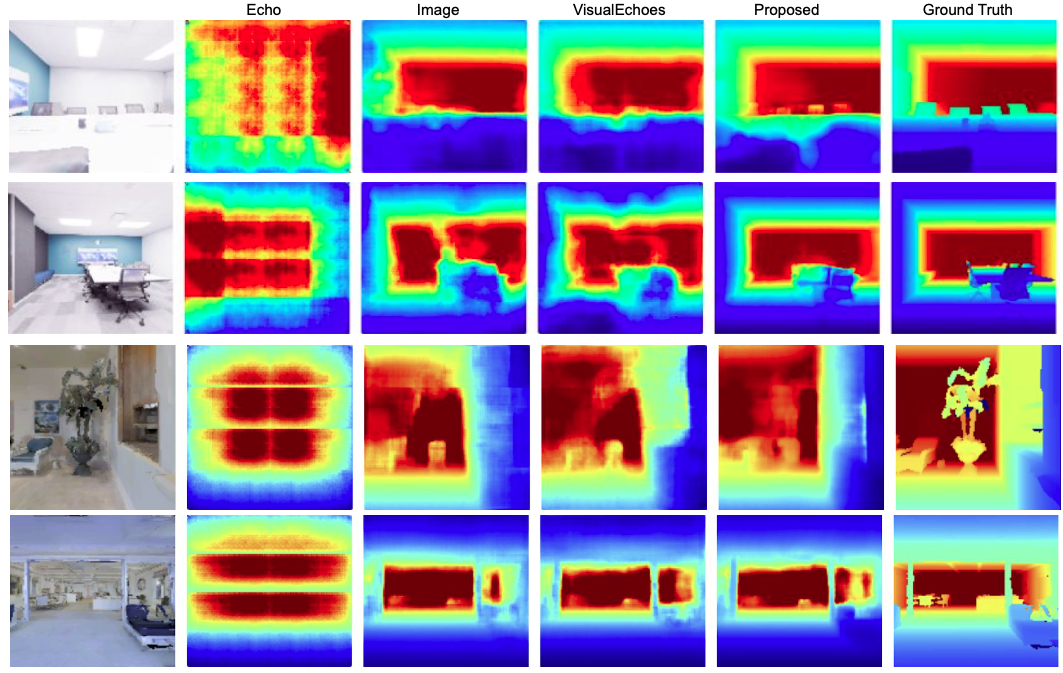

We address the problem of estimating depth from multimodal audio-visual data. Inspired by the ability of animals such as bats and dolphins to infer the distance of objects through echolocation, some recent methods have used echoes for depth estimation. We propose an end-to-end, deep-learning-based pipeline that uses RGB images, binaural echoes, and the estimated material properties of the various objects within a scene. We argue that the relation between image, echoes, and depth for different scene elements is strongly influenced by the properties of those elements, and that a method designed to leverage this information can significantly improve depth estimation from audio-visual inputs. We propose a novel multimodal fusion technique that explicitly incorporates material properties while combining the audio (echo) and visual modalities to predict scene depth. We show empirically, with experiments on the Replica dataset, that the proposed method obtains a 28% improvement (reduction) in RMSE compared to the state-of-the-art audio-visual depth prediction method. To demonstrate the effectiveness of our method on a larger dataset, we report competitive performance on Matterport3D, which we propose as a multimodal depth prediction benchmark with echoes. We also analyse the proposed method with exhaustive ablation experiments and qualitative results.
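The abstract does not spell out how the fusion works. As a rough illustration only, the sketch below assumes one simple form: a per-pixel weight `alpha`, predicted from material features, that blends a depth map estimated from echoes with one estimated from the RGB image. The function `fuse_depth` and its parameters `w` and `b` are hypothetical stand-ins for learned layers; this is not the authors' implementation. The sketch also shows how RMSE and a relative RMSE improvement (the kind of figure behind the reported 28%) are computed.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    """Logistic function: maps any real value into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def fuse_depth(depth_echo, depth_image, material_feats, w, b):
    """Hypothetical material-aware fusion of two depth maps.

    depth_echo:     (H, W) depth predicted from binaural echoes
    depth_image:    (H, W) depth predicted from the RGB image
    material_feats: (H, W, C) per-pixel material features
    w, b:           hypothetical learned weights, shape (C,), and scalar bias

    A per-pixel weight alpha in (0, 1) is computed from the material
    features and used to blend the two modality-specific estimates.
    """
    alpha = sigmoid(material_feats @ w + b)  # (H, W, C) @ (C,) -> (H, W)
    fused = alpha * depth_echo + (1.0 - alpha) * depth_image
    return fused, alpha


def rmse(pred, gt, valid=None):
    """Root-mean-square error, optionally restricted to valid pixels."""
    sq_err = (pred - gt) ** 2
    if valid is not None:
        sq_err = sq_err[valid]  # keep only pixels with usable ground truth
    return float(np.sqrt(sq_err.mean()))


def relative_improvement(baseline_rmse, new_rmse):
    """Fractional RMSE reduction, e.g. 0.28 for a 28% improvement."""
    return (baseline_rmse - new_rmse) / baseline_rmse
```

With all-zero material features and zero parameters, `alpha` is 0.5 everywhere and the fused map is simply the average of the two inputs. In a trained model, `alpha` would instead shift per pixel toward whichever modality is more reliable for that surface's material.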

If you use the code or dataset from the project, please cite:

@InProceedings{parida2021image,
  author = {Parida, Kranti and Srivastava, Siddharth and Sharma, Gaurav},
  title = {Beyond Image to Depth: Improving Depth Prediction using Echoes},
  booktitle = {Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},
  year = {2021}
}